What are the Basics of Log Management?

A log is a file created by a computer that records activity within an operating system or a particular software program. Events such as messages, error reports, file requests, and file transfers are recorded in the log file automatically. Each entry is also timestamped, which helps developers and IT specialists understand both what happened and when it happened.

Log management is typically driven by three concerns: security, system and network operations (such as system or network administration), and regulatory compliance. Almost every computing device produces logs, and these can often be sent to different destinations, either on the local file system or on a remote system.

Logging produces technical data that is useful for maintaining websites and applications. Logging is helpful in the following cases:

- to determine whether a reported bug is truly a bug

- to identify, reproduce, and fix issues

- to assist in the early testing of new features

This article answers the following questions to give you a more comprehensive view of log management:

- What is Log Management?

- What is Centralized Log Management?

- Why Do You Need Log Management?

- What are the Types of Log Data?

- What are the Stages of the Log Management Life Cycle?

- What are the Advantages of Log Management?

- What are the Challenges of Log Management?

- What are Log Management Tools?

- What is the Difference Between System Logs and Audit Logs?

- What is the Difference Between a Log Management System and SIEM?

- What are the Best Practices for Log Management?

- How to Manage Zenarmor Logs?

What is Log Management?

Organizations produce enormous amounts of log data and events from applications, networks, systems, and users. As a result, they need a systematic process to manage and track the different types of data spread across log files. Log management is the process of routinely collecting, storing, processing, synthesizing, and analyzing data from various programs and applications in order to improve compliance, uncover technical problems, and optimize system performance.

In computing, a log is an automatically generated, timestamped record of events relevant to a particular system. Almost all software programs and systems generate log files.
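To make this definition concrete, here is a minimal sketch that uses Python's standard `logging` module to write each event as one line carrying a UTC timestamp, a severity level, and a source name. The logger name `app.auth` and the `key=value` message text are hypothetical choices for illustration; real systems define their own formats.

```python
import io
import logging
import time

def make_logger(stream):
    """Return a logger that writes one UTC-timestamped line per event to `stream`."""
    logger = logging.getLogger("app.auth")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep events out of the root logger
    handler = logging.StreamHandler(stream)
    formatter = logging.Formatter(
        fmt="%(asctime)s %(levelname)s %(name)s: %(message)s",
        datefmt="%Y-%m-%dT%H:%M:%SZ",
    )
    formatter.converter = time.gmtime  # timestamps in UTC rather than local time
    handler.setFormatter(formatter)
    logger.handlers = [handler]  # replace, so repeated calls do not duplicate output
    return logger

buf = io.StringIO()
log = make_logger(buf)
log.info("user=alice action=login result=success")
log.warning("user=bob action=login result=failure")
print(buf.getvalue(), end="")
```

Each printed line starts with a timestamp such as `2024-05-01T12:00:00Z`, so anyone reading the log later can tell both what happened and when.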

Effective log management is crucial for both security and compliance. Monitoring, recording, and analyzing system events is one of the most important aspects of security intelligence. On the compliance side, laws such as the Gramm-Leach-Bliley Act, the Sarbanes-Oxley Act, and the Health Insurance Portability and Accountability Act (HIPAA) have specific requirements relating to audit logs.

Log management software automates many of these tasks. For instance, an event log manager tracks changes to an organization's IT infrastructure, and those changes make up the audit trail that must be produced for a compliance audit.

What is Centralized Log Management?

In centralized logging, logs from networks, infrastructure, and applications are gathered in one place for storage and analysis. Centralized logging gives managers a consolidated view of all network activity, making it simpler to find and fix problems.

Because data originates from many sources, including the OS, applications, servers, and hosts, all inputs must be combined and standardized before the business can derive actionable insights. Centralization lets the organization put this data to use faster and with less effort.

The combined data is then presented in a centralized, user-friendly interface. A Centralized Log Management (CLM) system is designed primarily to make life easier for internal security teams by removing the tedious task of manually sorting through mountains of log data.
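The gathering step of centralized logging can be sketched as a k-way merge: each source produces its own time-ordered stream, and the central store interleaves them into a single timeline tagged with the originating source. This is a minimal sketch under simple assumptions (every line begins with an ISO-8601 timestamp, and each source's lines are already in time order); the source names and sample lines are hypothetical.

```python
import heapq
from datetime import datetime

def parse(source, line):
    """Split 'ISO-timestamp message' into a sortable tuple tagged with its source."""
    ts, message = line.split(" ", 1)
    return (datetime.fromisoformat(ts), source, message)

def aggregate(sources):
    """Merge per-source logs (each already in time order) into one central timeline."""
    streams = [
        [parse(name, line) for line in lines]
        for name, lines in sources.items()
    ]
    return list(heapq.merge(*streams))  # k-way merge keeps the global time order

sources = {  # hypothetical sample data from three systems
    "firewall": ["2024-05-01T10:00:01+00:00 DROP src=203.0.113.7 dport=22",
                 "2024-05-01T10:00:05+00:00 DROP src=203.0.113.7 dport=23"],
    "auth": ["2024-05-01T10:00:03+00:00 login failed user=root"],
    "web": ["2024-05-01T10:00:02+00:00 GET /index.html 200"],
}
for ts, source, message in aggregate(sources):
    print(ts.isoformat(), source, message)
```

Seen this way, the firewall drops and the failed root login from the same period appear side by side in one view, which is the consolidated picture centralization is meant to provide.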

Why Do You Need Log Management?

IT infrastructures produce enormous volumes of logs every day. Consider that each of the following produces event logs every second:

- Devices: workstations, servers, routers, and switches

- Networks: incoming and outgoing traffic

- Users: successful and unsuccessful logins

- Applications: operation success or failure

For instance, a program may write an event log entry every time an operation completes successfully. Multiply every successful operation by the number of users and applications you have, and the volume quickly becomes overwhelming.

In a complex IT environment, everything that creates an event log is interdependent, so looking at a single type of event log will not tell you everything you need to know. You need to be able to correlate events and access the information from wherever you are.

Log management gives you the transparency you need to cut through the noise this information creates. An effective log management system and strategy enable real-time insight into system health and operations. An efficient log management solution offers the following capabilities:

- Centralized log aggregation for unified data storage

- Increased security through a smaller attack surface, real-time monitoring, and faster detection and response times

- Enhanced enterprise-wide observability and visibility through a shared event log

- Improved customer experience through log data analysis and predictive modeling

- Enhanced network analytics capabilities for faster and more accurate troubleshooting

What are the Types of Log Data?

Compared with other data sources, logs such as file auditing, DLP, or file integrity auditing are less valuable to security operations and can be difficult to integrate successfully. Event data produced by the following network hardware, network services, security hardware, and software helps detect advanced threats:

- Web Servers: IIS, Apache, Tomcat

- Authentication sources: LDAP, AD, SSO, VPN, and two-factor authentication

- Networking hardware: switches and routers

- DHCP, NAT, and other network functions

- Network sensors like Bro, Npulse, and Extrahop

- Firewalls, IPS, DLP, and NAC (Network Access Control)

- Applications: ERP, CRM, and web-based programs

- Email: Security events, filtering, and server transaction events

- Threat Detection Technologies

- Internet hardware: routers, switches, and VPNs

- Web Proxy

- Endpoint protection: Bit9, HIPS, and antivirus

- Log aggregators: Splunk, Q1, Rsyslog, ArcSight, RSA Envision, and Estreamer

Out of all these sources, there are four main categories of log data that every IT team should monitor. This section covers the logs an administrator should keep, why those logs matter, and the specific security issues to watch for. After setting up a log management solution, a system administrator often watches the logs come in and asks, "OK, so now what?" The answer is to start with the fundamentals.

The primary types of log data are outlined below:

- Failed Login Attempts: If nothing else, you should be monitoring and archiving these logs for compliance purposes. Unsuccessful login attempts can be warning signs that something is off. Most failed login attempts are harmless. However, if numerous login attempts fail in a short period of time, this can be a sign that an attacker is trying to gain access to the system.

- Intrusion detection systems and firewalls: Logs from security technologies such as firewalls and intrusion detection systems can provide a wealth of information about a company's security posture and the general health of its security systems. Firewall logs are a basic requirement because your firewall serves as the first line of defense against external attacks. That said, today's most sophisticated persistent attacks can bypass the firewall entirely, and even antivirus software cannot detect every instance of harmful activity.

If your industry's regulations require information to be retained in case of an audit or data breach, keep this data for at least a few weeks for your records. Memory and storage for this log data are relatively inexpensive, although they are not free.

- Routers and switches: All common network devices provide log data. Even though this kind of log data may appear unimportant, you still need to monitor it. You must be able to track every piece of data that travels through your organization, from servers to switches and routers to firewalls. Monitoring configuration changes on these network devices is crucial, because configuration changes can clearly show whether sysadmins are performing their duties properly.

If you have change control policies and administrators are not adhering to them, or if changes are happening on switches and routers that you did not authorize or were not aware of, it may indicate dishonest or improper behavior, or both.

Such changes can be disastrous. For instance, if a configuration update on a device took the network down and there was no simple way to roll back to the previous state, you would be in trouble.

- Application Logs: Some applications write to the Windows application log, while others have extensive logging capabilities of their own. Microsoft allows many applications to use the Windows logging infrastructure.

Applications that process protected medical data are an excellent illustration. Their application logs frequently provide much of the same information as the Windows logs. Suppose the system has its own user database: its logs will show logins and logoffs, the screens each user visited, and other information about where they went in the application. How much logging is available depends largely on the application.

These are the four fundamental logs that IT teams need to monitor and manage regularly. If a system has caused you problems before, it makes sense to enable its logging features so that you can track errors and learn from them. Edge cases can always appear out of the blue, so knowing that you are collecting that log data will help you and your IT staff examine and assess any given situation quickly.
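For the router and switch logs described above, one simple way to surface configuration changes is to diff each device's current configuration against the last approved snapshot. This is a minimal sketch assuming you already collect configurations as plain text (for example, from scheduled backups). The device name and the configuration lines are hypothetical, and no particular vendor's tooling is implied.

```python
import difflib

def config_changes(old_config, new_config, device):
    """Return unified-diff lines describing what changed between two config snapshots."""
    return list(difflib.unified_diff(
        old_config.splitlines(),
        new_config.splitlines(),
        fromfile=f"{device} (approved)",
        tofile=f"{device} (current)",
        lineterm="",
    ))

approved = """hostname core-sw1
interface Gi0/1
 switchport access vlan 10
logging host 192.0.2.50"""
current = """hostname core-sw1
interface Gi0/1
 switchport access vlan 99
logging host 192.0.2.50"""

diff = config_changes(approved, current, "core-sw1")  # hypothetical device
if diff:
    # In practice this would raise an alert or be matched against a change ticket.
    print("\n".join(diff))
```

An empty diff means the device still matches its approved state. Any non-empty diff that has no corresponding change request is exactly the kind of unauthorized modification worth investigating.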

What are the Stages of the Log Management Life Cycle?

Keep the stages of effective log management in mind when evaluating log management systems.

Log management is implemented for many reasons, not the least of which is analyzing network security events for forensics and intrusion detection. The best-run IT shops already recognize the importance of logging for system and application management, and auditing and compliance are now becoming as important as traditional security requirements.

Depending on the reason for logging, the log management process includes several distinct phases: policy design, configuration, collection, normalization, indexing, storage, correlation, baselining, alerting, and reporting. The stages of the log management lifecycle are outlined below:

- Policy Design: Policy definition means deciding what to audit and what to alert on. Is your company interested in compliance auditing, operations and application management, or security event detection? Will you audit network devices and applications in addition to desktops and servers?

- Configuration: After deciding what you want to audit and why, you must specify which log events will let you accomplish those objectives. Many log management vendors offer "suites" or "packages" that attempt to provide predefined, built-in configurations for different purposes, although I was unable to find one that was as comprehensive as required. Each user will need to assess what the product can currently collect and alert on before defining additional capture to meet their environment's needs. Configuration is the process of turning your audit policies into actionable information capture.

- Collection: Collecting data means sending log events from clients to the log management server. Most solutions offer agentless collection, in which clients send their events to the server themselves. Where agentless collection is not appropriate, most log management packages include agent software to help with data collection.

- Normalization: As collected data enters the data stream, it is often parsed and split into separate data fields. Parsed, structured data is usually easier to index, retrieve, and report on. Raw or unstructured data, often called unparsed data, can usually be collected as well, but it is harder to index, retrieve, or report on. To get at the information, administrators often have to write their own parser or treat the unstructured data as a single field searched with keywords.

Normalization is the process of converting different representations of the same kind of data into a single, equivalent format in a common database. This might mean converting reported event times to a standard time format, such as local time or Coordinated Universal Time (UTC), in the log management database. It can also mean resolving IP addresses to host names and other efforts to bring disparate information closer together. The more data that is normalized and processed, the better. When evaluating products, pay attention to how many parsers each one includes to ensure it can handle the bulk of the log data in your environment.

- Indexing: Data must be indexed as it is stored in order to speed up retrieval for search queries, filters, and reporting. Indexing typically relies on parsed data, although some vendors also index unstructured data for faster retrieval.

- Storage: Captured data must be kept in medium- or long-term storage. All of the tested devices save data to local hard disks, and some can also store data on external storage systems such as SAN or NAS. All of the tested products can export event messages and store them for later retrieval. If you are concerned about legal chain-of-custody requirements, make sure the solution you are considering cryptographically signs all stored messages.

- Correlation: Correlation is the process of combining events from the same or different sources to identify a single incident. For instance, some log management tools can recognize a packet flood or a password-guessing attack instead of just logging many dropped packets or failed login attempts. Correlation capability reveals how sophisticated a product is. Security Information and Event Management (SIEM) tools are log management tools that excel at correlation. Many of the reviewed products combine SIEM capabilities with log management (log collection, storage, querying, and reporting); however, only their log management functionality was assessed.

Note that every piece of incoming log data must carry an accurate timestamp for centralized log management to work properly. Ensure that the time and time zone are correct on every monitored client. This matters for forensic analysis, legal purposes, and reporting.

- Baselining: Baselining is the act of establishing what is normal in a given environment so that only anomalous patterns and events trigger alerts. For instance, every network environment sees some unsuccessful login attempts each day. How many failed login attempts are normal? How many within a specific time frame should be flagged as suspicious? Some log management tools monitor incoming message traffic and help you set alerts that fire when levels reach particular thresholds. If the product cannot do this, you must set those thresholds yourself.

- Alerting: When a critical security or operational event occurs, it is crucial to alert a response team. Most systems support SNMP and email alerts, and a few also support paging, SMS, network broadcast messages, and Syslog forwarding. A few tools integrate with popular help desk software to create and route service tickets automatically.

Most solutions support alert thresholds, an essential capability for preventing a single causal event from triggering many continuous alarms. For instance, you do not want to receive 1,000 alerts for a single, ongoing port scan across several ports. The response team needs only one alert to respond.
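The Normalization stage above can be illustrated with two hypothetical input formats: a text line with a local UTC offset, and a JSON record carrying a Unix epoch time. Both are mapped to one common record whose timestamp is expressed in UTC. This is a minimal sketch. The field names (`timestamp`, `host`, `message`) and both input layouts are assumptions for illustration, not any product's schema.

```python
import json
from datetime import datetime, timezone

def normalize_text(line):
    """Parse a hypothetical 'YYYY-MM-DD HH:MM:SS +ZZZZ host message' line."""
    date, clock, offset, host, message = line.split(" ", 4)
    ts = datetime.strptime(f"{date} {clock} {offset}", "%Y-%m-%d %H:%M:%S %z")
    return {"timestamp": ts.astimezone(timezone.utc).isoformat(),
            "host": host, "message": message}

def normalize_json(line):
    """Parse a hypothetical JSON record whose 'time' field is a Unix epoch."""
    record = json.loads(line)
    ts = datetime.fromtimestamp(record["time"], tz=timezone.utc)
    return {"timestamp": ts.isoformat(),
            "host": record["host"], "message": record["msg"]}

a = normalize_text("2024-05-01 12:00:00 +0200 web01 Failed password for bob")
b = normalize_json('{"time": 1714557600, "host": "fw01", "msg": "DROP src=203.0.113.7"}')
print(a["timestamp"], b["timestamp"])  # both: 2024-05-01T10:00:00+00:00
```

Once both events share one time representation, they can be indexed, sorted, and correlated together even though their sources disagreed on format and time zone.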
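The Storage stage recommends cryptographically signed messages for chain of custody. As a rough stand-in, the sketch below chains HMAC-SHA256 tags so that each tag covers its message and the previous tag. Products may instead use asymmetric digital signatures, and key management is assumed to be handled elsewhere, so treat this as an illustration of tamper evidence rather than a compliant design.

```python
import hashlib
import hmac

def seal(messages, key):
    """Return (message, tag) pairs; each tag covers the message and the previous tag."""
    sealed, prev = [], b""
    for msg in messages:
        tag = hmac.new(key, prev + msg.encode(), hashlib.sha256).hexdigest()
        sealed.append((msg, tag))
        prev = tag.encode()
    return sealed

def verify(sealed, key):
    """Recompute the chain. Editing, reordering, or deleting an entry breaks it from
    that point on; truncating the tail is only caught if the last tag is kept elsewhere."""
    prev = b""
    for msg, tag in sealed:
        expected = hmac.new(key, prev + msg.encode(), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, tag):
            return False
        prev = tag.encode()
    return True

key = b"demo-key"  # hypothetical; real keys belong outside the log store
sealed = seal(["user=alice login ok", "user=bob login failed"], key)
print(verify(sealed, key))  # True
sealed[0] = ("user=alice login FAILED", sealed[0][1])  # simulate tampering
print(verify(sealed, key))  # False
```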
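The Correlation stage mentions turning many failed logins into a single password-guessing incident. A minimal sliding-window sketch of that idea follows. It assumes events arrive as time-ordered `(epoch_seconds, source_ip, outcome)` tuples, and the defaults of 5 failures in 60 seconds are hypothetical values, not recommendations.

```python
from collections import defaultdict, deque

def correlate_failed_logins(events, threshold=5, window=60):
    """Collapse bursts of failed logins into single 'password_guessing' incidents.

    One incident is raised per source when `threshold` failures fall within
    `window` seconds; further failures in the same burst are absorbed.
    """
    recent = defaultdict(deque)  # source -> timestamps of failures inside the window
    active = set()               # sources that already have an open incident
    incidents = []
    for ts, source, outcome in events:
        if outcome != "failure":
            continue
        q = recent[source]
        q.append(ts)
        while q and ts - q[0] > window:
            q.popleft()          # drop failures that fell out of the window
        if len(q) >= threshold and source not in active:
            incidents.append({"type": "password_guessing", "source": source,
                              "first_seen": q[0], "count": len(q)})
            active.add(source)
        elif len(q) < threshold:
            active.discard(source)  # burst is over; a new one may raise a new incident
    return incidents

events = sorted(
    [(t, "203.0.113.7", "failure") for t in range(10)]
    + [(3, "198.51.100.4", "failure"), (4, "198.51.100.4", "failure")]
)
print(correlate_failed_logins(events))
```

Ten raw failures from one address become a single incident, while the two scattered failures from another address stay below the threshold.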
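For the Baselining stage, one common heuristic (assumed here, not prescribed by the text) is to treat anything above the historical mean plus k standard deviations as anomalous. The daily failed-login counts and k = 3 below are hypothetical.

```python
import statistics

def baseline_threshold(daily_counts, k=3.0):
    """Alert threshold = historical mean + k population standard deviations."""
    mean = statistics.mean(daily_counts)
    stdev = statistics.pstdev(daily_counts)
    return mean + k * stdev

history = [42, 38, 51, 45, 40, 47, 39]  # hypothetical failed logins per day
limit = baseline_threshold(history)
for day, count in [("Mon", 44), ("Tue", 120)]:
    status = "ANOMALY" if count > limit else "normal"
    print(f"{day}: {count} failed logins ({status}, threshold {limit:.1f})")
```

A larger k produces fewer but more certain alerts. Recomputing the baseline periodically lets the threshold follow gradual, legitimate changes in the environment.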
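The alert-threshold behavior described under Alerting, where a port scan produces one alert instead of 1,000, can be sketched as a suppression window keyed on the alert's cause. The key shape `(rule, source)` and the 300-second window are assumptions for illustration.

```python
class AlertSuppressor:
    """Forward the first alert for a key, then suppress repeats for `window` seconds."""

    def __init__(self, window=300):
        self.window = window
        self.last_sent = {}   # key -> time the last alert was forwarded
        self.suppressed = {}  # key -> repeats swallowed since that alert

    def submit(self, key, ts):
        """Return True if the alert should be forwarded to the response team."""
        last = self.last_sent.get(key)
        if last is not None and ts - last < self.window:
            self.suppressed[key] = self.suppressed.get(key, 0) + 1
            return False
        self.last_sent[key] = ts
        self.suppressed[key] = 0
        return True

suppressor = AlertSuppressor(window=300)
key = ("port_scan", "203.0.113.7")  # hypothetical key: rule name + source address
# One scan probing 1,000 ports over about 100 seconds yields 1,000 raw events.
forwarded = sum(suppressor.submit(key, port * 0.1) for port in range(1000))
print(forwarded, suppressor.suppressed[key])
```

The response team receives one alert, and the suppressed count records how noisy the underlying event was.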

What are the Advantages of Log Management?

Log management enables a business's DevOps, IT, and security teams to make use of the vast amount of raw data generated as logs. The advantages of properly implemented log management are as follows:

- Improved communication across teams: Because many departments rely on IT resources to carry out business-critical tasks, log analysis tools help identify critical system faults or patterns and resolve them quickly. Log analyzers take a proactive approach to identifying and troubleshooting problems, which reduces service interruptions and downtime and helps maintain SLAs between IT teams and other departments or customers.

- More accurate root cause analysis: When an application experiences problems, log data helps locate the root cause. When the system throws an exception, the entire stack trace is often recorded in the application's error log. This information lets engineers find the problematic method calls and the specific line of code that caused the issue, making the problem easier to analyze and reproduce. Effective root cause analysis is a key component of a successful incident response strategy because it helps responders understand the problem and identify a long-term fix. Log data therefore helps reduce Mean Time to Resolution (MTTR), a crucial incident response metric. Reducing MTTR lessens the impact of incidents on end users. It also frees developers to spend more time building innovative features that make the product more appealing to customers.

- Meeting regulatory requirements: Along with internal policies, many businesses are legally required to comply with data security and privacy laws, including HIPAA, PCI DSS, and GDPR. The right log management tool helps you meet these requirements by redacting and masking sensitive information in logs, ensuring that customer data is retained securely, and limiting access to sensitive data to authorized users. Furthermore, thorough event log files serve as a reliable record of the security of your software, the dependencies among your apps and services, and the kinds of data they can access. With a log management application, you can continuously track your progress toward these objectives.

- Analysis of application usage: Log data is useful for identifying anomalies, but log management offers additional advantages. For instance, developers can use log data to understand fully how users interact with an app. The request logs of a web application can reveal important trends and patterns, such as the times of day when the application receives the most traffic. For security purposes, audit logging enables the analysis of user behavior inside an application. A system's audit log often records logins and logouts as well as when and how data is changed within the system. Having a mechanism for identifying, tracking, and (potentially) undoing unwanted data modifications gives organizations a significant advantage: they can use it to improve security and reduce the impact of an incident.
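The masking mentioned under regulatory requirements can be sketched with regular expressions that redact sensitive values before a line is stored. The two patterns below (16-digit card-like numbers, keeping the last four digits, and e-mail addresses) are simplified assumptions. They will miss some formats and may over-match others, so real deployments need patterns tuned to their own data.

```python
import re

# Hypothetical, simplified patterns; tune them to the data you actually log.
PATTERNS = [
    (re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?(\d{4})\b"), r"****-****-****-\1"),
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "<email>"),
]

def mask(line):
    """Redact card-like numbers (keeping the last four digits) and e-mail addresses."""
    for pattern, replacement in PATTERNS:
        line = pattern.sub(replacement, line)
    return line

print(mask("payment ok card=4111 1111 1111 1234 user=jane.doe@example.com"))
# payment ok card=****-****-****-1234 user=<email>
```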
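The application-usage example (finding the times of day with the most traffic) can be sketched by counting requests per hour in access-log lines. This assumes Apache/NGINX-style Common Log Format timestamps such as `[01/May/2024:09:15:02 +0000]`. The sample lines are hypothetical, and hours are reported in each line's own UTC offset.

```python
from collections import Counter
from datetime import datetime

def requests_per_hour(lines):
    """Count requests per hour of day from Common Log Format access-log lines."""
    hours = Counter()
    for line in lines:
        stamp = line.split("[", 1)[1].split("]", 1)[0]  # text between the brackets
        ts = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S %z")
        hours[ts.hour] += 1  # hour in the offset recorded in the log line
    return hours

access_log = [  # hypothetical sample lines
    '203.0.113.7 - - [01/May/2024:09:15:02 +0000] "GET / HTTP/1.1" 200 512',
    '198.51.100.4 - - [01/May/2024:14:02:44 +0000] "GET /cart HTTP/1.1" 200 1024',
    '192.0.2.10 - - [01/May/2024:14:30:12 +0000] "POST /checkout HTTP/1.1" 302 0',
]
busiest_hour, count = requests_per_hour(access_log).most_common(1)[0]
print(f"Peak traffic at {busiest_hour:02d}:00 ({count} requests)")
```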

What are the Challenges of Log Management?

Log management has grown more complex for many organizations because of the explosion in data caused by the spread of connected devices and the move to the cloud. The top log management challenges that IT teams face today, along with ways to address them, are listed below:

- Cost issues with the cloud: Because of the variety of data sources they must manage, IT teams today struggle to size their log storage requirements properly, which often requires dynamic provisioning and de-provisioning. Some large businesses store petabytes of log data, making logging a storage-hungry operation. Too much data also adds complexity and makes problems harder to solve. An intelligent log management platform with analytical capabilities helps teams observe massive amounts of data and detect anomalies more quickly.

Disabling logs, deleting them too soon, or purging them all at once can damage your observability. Use open-source tools and offline cold storage to store, handle, and retrieve (rehydrate) data as needed. Make sure you have a solid audit trail and at least 30 days of searchable, immediately available logs, and archive the rest.

- Technical difficulties: Technical issues in log management fall into the 3 Cs: context, correlation, and cloud. Context is the first issue: extracting meaning from a large collection of logs requires human interaction.

Correlation, the second issue, is the ability to connect different logs to gain insights. A thorough log analysis tool that understands systemic events and detects faults comprehensively helps achieve the best log correlation. Log correlation also helps avoid false positives, prioritize risk-based alerts, and examine the causes of failures more thoroughly.

Depending on how critical the business is, IT teams must keep the most important logs for a typical duration of 30 days or more to achieve successful log correlation. Archived logs must be re-indexed (also known as rehydrated) before they can be searched again; re-indexing means retrieving old logs from archival storage and indexing them once more to make them searchable. The third issue is the cost of storing logs in the cloud.

- Problem-solving difficulties: If logs are not managed properly, it can be difficult to pinpoint the exact source of performance issues quickly. Because more than one factor may have contributed to an issue, the first step is to determine whether an infrastructure flaw, a trace fault, or a transaction problem caused it.

A strong approach to problem-solving includes fine-grained log analysis. Consider the case where a website is unavailable. To pinpoint the main cause clearly, it is critical to determine right away whether the app server, the database server, or a CPU, memory, or disk utilization issue is to blame. To enable accurate log analysis, study service maps to drill down to the cluster or port level. Fast and accurate root cause analysis requires a complete, user-friendly log management solution operated by trained and experienced personnel.

- Cutting through the clutter: In the age of hybrid clouds, data explosions, microservices, and distributed, sophisticated infrastructure tiers that collaborate to deliver software services, logging matters even more. More log data is not always better. IT teams need context to manage the flood of logs.

Log clutter can also push up the cost of cloud storage. When this happens, many IT teams reflexively purge large portions of log data, potentially erasing crucial evidence. Unmanaged log congestion also makes real-time monitoring more difficult and reduces operational efficiency, and log clutter leads to aggregation problems, a lack of clarity, and diluted alerts.

- Operational difficulties: Data from distributed, cross-linked systems can contain a wealth of context. Dynamic components such as containers are distinct environments in which processes are created and deleted as needed. The constant flow of data generated by complex IT setups makes it difficult to handle all logs in a single location. It also makes specific logs harder to find when troubleshooting, which can hurt MTTR. Collecting logs in a real-world production setting is harder still, so a complete log management system is necessary.

- Accessibility issues: IT teams should make sure that logs can be discovered automatically so they can be collected and categorized in a log management platform. To improve access, it is essential that logs are properly categorized, timestamped, and indexed. A centralized, query-based search then lets you browse the stored logs.

- Difficulties with Automation: Not all automated processes can be left entirely without human involvement, particularly in log management. Even though much of log collection now happens automatically, you still need context and judgment from the right people to dig deeply into logs and develop automated remedies. A completely hands-free approach therefore undermines automation. Ironically, automating log handling requires timely expert involvement so that the system can improve, learn, and perform better, reducing false alarms and increasing accuracy.

Logs are essential to the overall performance of an IT team. Log analysis provides unmatched visibility into the performance and health of your IT infrastructure, improves processes, and helps you mitigate problems. Basing important decisions on this information lets you improve your products and services continuously. IT teams need a comprehensive cloud-based log management platform that gives them instant access to the power of observability.
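The retention advice in the challenges above (keep at least 30 days searchable, archive the rest, and rehydrate archives when needed) can be sketched as follows. In this minimal sketch, gzip-compressed bytes stand in for offline cold storage and a per-day dictionary stands in for a real search index. Both are simplifying assumptions, and the sample entries are hypothetical.

```python
import gzip
from datetime import datetime, timedelta, timezone

HOT_DAYS = 30  # minimum searchable window suggested in the text

def split_by_age(entries, now):
    """Separate (timestamp, line) entries into hot (searchable) and cold (to archive)."""
    cutoff = now - timedelta(days=HOT_DAYS)
    hot = [(ts, line) for ts, line in entries if ts >= cutoff]
    cold = [(ts, line) for ts, line in entries if ts < cutoff]
    return hot, cold

def archive(cold):
    """Compress cold entries into a gzip blob (stand-in for cheap cold storage)."""
    text = "\n".join(f"{ts.isoformat()} {line}" for ts, line in cold)
    return gzip.compress(text.encode())

def rehydrate(blob):
    """Decompress an archive and re-index it by day so it is searchable again."""
    index = {}
    for row in gzip.decompress(blob).decode().splitlines():
        ts, line = row.split(" ", 1)
        index.setdefault(datetime.fromisoformat(ts).date(), []).append(line)
    return index

now = datetime(2024, 5, 31, tzinfo=timezone.utc)
entries = [(now - timedelta(days=d), f"event {d}d old") for d in (1, 10, 45, 90)]
hot, cold = split_by_age(entries, now)
blob = archive(cold)         # ship this to cold storage
restored = rehydrate(blob)   # later: bring it back for an investigation
print(len(hot), "hot entries;", sum(len(v) for v in restored.values()), "rehydrated")
```

Keeping the hot window small controls storage cost, while the archive preserves evidence instead of purging it.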

What are Log Management Tools?

Log management tools are products that collect, organize, examine, display, and dispose of log data. They provide dynamic performance monitoring and real-time alert management, giving companies more visibility into and understanding of their systems' security, efficacy, and health. Log management software can also clean, convert, or otherwise transform log data.

Tools for log management are beneficial because they let users access detailed records of their data, system alarms, and asset performance from a centralized platform. Log management systems help identify and address potentially problematic data occurrences, which is very helpful in network security applications. Additionally, visualization solutions for log management produce clear network maps and data utilization graphs.

In terms of event and alert management, log management tools have features in common with Security Information and Event Management (SIEM) software and event monitoring software. Log management tools stand out, however, because they provide thorough, automated functionality for log aggregation, cleaning, and storage. The best log management software products are listed below:

- SolarWinds Log Analyzer: SolarWinds Log Analyzer performs log aggregation, tagging, filtering, and alerting, which helps you troubleshoot effectively. Its features include event log tagging, robust search and filtering, a real-time log stream, Orion platform integration, Orion alert integration, and log and event collection and analysis. Ideal for medium-sized to large organizations. Features of SolarWinds Log Analyzer are as follows:

- Its log monitoring tools help you investigate root causes.

- You can run searches with filters and a variety of search parameters.

- It offers a live, interactive log stream.

- Color-coded tags for log data.

- ManageEngine EventLog Analyzer: EventLog Analyzer is a complete log management tool that performs a number of essential tasks. Primarily, it lets you archive collected logs securely, using sophisticated hashing and time-stamping techniques. The program is useful for maintaining file integrity, protecting your web servers, and monitoring network devices. Best for managing logs from web servers, workstations, perimeter devices, application servers, databases, etc. Features of ManageEngine EventLog Analyzer are listed below:

- Receive an immediate warning when significant changes are made to crucial files and folders.

- Utilize the global threat intelligence database to quickly identify malicious IP traffic that is entering your network.

- High-speed log searches can be carried out using Boolean search, group search, and range search.

- Real-time event log data correlation.

- Sematext Logs: Sematext Logs is a centralized log management system, available in the cloud or on-premises, that lets you collect, store, index, and analyze in real time logs from a wide range of data sources. For DevOps teams who want to troubleshoot faster, it offers a live log stream, alerts, and powerful search and filtering. Best for businesses of any size. Features of Sematext Logs are given below:

- Real-time correlation of logs with metrics and other events.

- Exposes the Elasticsearch API, making it easy to use with a wide range of popular log shipping tools, libraries, and Elasticsearch-compatible systems.

- The capacity to handle vast amounts of data.

- Kibana is available in addition to the Sematext UI.

- Flexible app-scoped pricing based on plan, volume, and retention, with no overage fees, gives you a lot of control over costs.

- Simple installation thanks to its open-source, lightweight log agent and data shipper for server, container, and application logs.

- Datadog: Datadog is a monitoring solution for hybrid cloud environments. By gathering metrics, events, and logs from more than 450 technologies, Datadog provides end-to-end visibility across dynamic, high-scale infrastructure.

Datadog log management accelerates troubleshooting efforts with comprehensive, connected data from throughout your environment, thanks to dynamic indexing strategies that make it economical to gather, evaluate, and store all of your logs. Features of Datadog are as follows:

- Rapid log searching, filtering, and analysis, as well as in-depth data exploration.

- Facet-driven, simple navigation lets you visualize and analyze collected logs without a query language.

- Auto-tagging and metric correlation let you view log data in context.

- Machine learning-based monitors and detectors quickly identify log trends and errors.

- Drag-and-drop features let you build real-time log analytics dashboards in seconds.

- With Logging Without Limits, you can send, process, and archive every log generated by your apps and infrastructure while paying to index only the high-value logs you actually need.

- More than 450 vendor-supported integrations, including Logstash, Fluentd, Elasticsearch, AWS CloudWatch, NGINX, and others, make collecting logs simple.

- Site24x7: Site24x7's log management tool collects, aggregates, indexes, analyzes, and manages logs from various servers, applications, and network devices. It simplifies troubleshooting by automatically discovering logs on your server, classifying them by type, and organizing them for easy indexing behind a simple, query-based interface. Features of Site24x7 are listed below:

- Troubleshoot problems such as application exceptions, failed file uploads, failed external database calls, and dynamic user input validation errors.

- Manage logs from several cloud service providers, including Amazon Web Services and Microsoft Azure, from a single interface.

- Upload logs through log collectors such as Logstash and Fluentd.

- Speed up troubleshooting with keyword-based searches and visual tools such as graphs and dashboards that show how many times a given log has been indexed.

- Monitor for log errors and receive threshold-based notifications via voice call, SMS, email, and the other third-party communication platforms your company uses.

-

Splunk: Both Splunk Cloud and Splunk Enterprise offer a free trial. The free Splunk plan limits your daily indexing volume to 500 MB. Splunk interprets machine data and turns it into results. Its log management capabilities include indexing machine data; searching, correlating, and exploring it; drill-down analysis; monitoring and alerting; and reporting and dashboards.

Splunk collects, searches, stores, indexes, correlates, visualizes, and analyzes all the data a machine generates. It is best suited to medium-sized and large organizations. Features of Splunk are given below:

- Premium plans give you full access to the APIs and SDKs of the developer environment.

- It can collect and index any machine data.

- The cloud-based offering can store data for up to 90 days.

- It provides real-time analysis, search, and visualization.

-

LogDNA: LogDNA offers a centralized log management solution with on-premises, cloud, and multi-cloud deployment options. The software performs real-time log aggregation, monitoring, and analysis, and includes a modern UI, fast search and filtering, and intelligent alerts. It is best suited to medium-sized and large organizations. Features of LogDNA are as follows:

- It aggregates, monitors, and analyzes logs from any platform in real time.

- It provides automatic field parsing, archiving, and real-time alerts.

- It scales to any data volume.

- LogDNA is Privacy Shield certified.

- It can handle more than 100 terabytes of data per customer per day and 1 million log events per second.

-

Fluentd: Fluentd is an open-source data collector for a unified logging layer. It provides a consistent logging layer between data sources and backend systems. It is best suited to medium-sized and large organizations. Features of Fluentd are listed below:

- It can run with the operating system's default memory allocator.

- It needs only about 40 MB of RAM and offers self-service setup options.

- Because it is written in a combination of C and Ruby, it requires very few system resources.

- With more than 500 plugins, Fluentd can connect to a wide range of data inputs and outputs.

- It is well regarded by its community.

-

Logalyze: Logalyze is an open-source alternative to Fluentd for log management. It can be used as a network management tool, centralized log management system, and application log analyzer.

It collects event logs from distributed Windows servers and syslog messages from distributed Linux, UNIX, and AIX systems. It can also collect logs from network devices such as switches, routers, and firewalls. It is best suited to medium-sized and large organizations. Features of Logalyze are as follows:

- Its log analyzer engine includes collectors, parser and analyzer modules, statistics and aggregation, events and alerts, and the Logalyze SOAP API.

- The administrator interface provides tools such as a log browser, statistics viewer, report generator, and admin functions, alongside a customizable, multilingual, web-based HTML user interface.

- It can analyze logs from custom business applications.

-

Graylog: Graylog's centralized log management solution captures, stores, and analyzes terabytes of machine data in real time, gathered from numerous log sources, data centers, and locations. It scales horizontally in your data center, in the cloud, or both. It is best suited to medium-sized and large organizations. Features of Graylog are given below:

- Faster alerting on cyber threats.

- Rapid data analysis that supports an effective incident response.

- An easy-to-use interface that makes it simpler to explore, alert on, and report on data.

- Capabilities covering data collection, organization, analysis, extraction, security, and performance.

- Support for audit logs, archiving, role-based access control, fault tolerance, and security and performance optimization.

-

Netwrix Auditor: Netwrix Auditor is IT auditing software that helps detect security threats. It runs on Windows and can audit a variety of IT systems, including Active Directory, Windows Server, and network devices. It is also used for remote access monitoring. It is best suited to medium-sized and large organizations. Features of Netwrix Auditor are listed below:

- Complete visibility into configuration changes, failed logon attempts, scanning threats, and network device hardware issues.

- Detection of hardware issues on Cisco, Fortinet, Palo Alto, SonicWall, and Juniper devices.

- Support for SharePoint, Office 365, Oracle Database, SQL Server, Windows Server, VMware, and Windows file servers.

- Notifications about important events, such as changes to a device's configuration.

What is the Difference Between System Logs and Audit Logs?

Audit logs differ from standard system logs (such as error logs and operational logs) in the data they contain, their intended use, and their immutability. Standard system logs are intended to help developers troubleshoot faults, whereas audit logs help organizations keep a historical record of activities for compliance and the enforcement of other business policies. A log from any network device, application, host, or operating system can be classified as an audit log if it records such activity data and is used for auditing. Audit logs are designed to be immutable, meaning that no user or service can change the audit trail. This matters because compliance frameworks typically require enterprises to retain trustworthy records over long periods.
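The article does not prescribe how immutability is achieved; in practice it usually comes from write-once storage and strict access controls. As an illustration of a related technique (a swapped-in example, not a method the article describes), the Python sketch below makes an audit trail tamper-evident by chaining each entry to the hash of the previous one. The helper names `append_entry` and `verify_chain` are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev_hash: str, record: dict) -> str:
    # Hash the previous entry's hash together with a canonical JSON form of the record.
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(chain: list, record: dict) -> None:
    """Append a record whose hash depends on every entry before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)})

def verify_chain(chain: list) -> bool:
    """Return False if an entry was edited, reordered, or removed from the middle."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True

audit = []
append_entry(audit, {"user": "alice", "action": "login_failed"})
append_entry(audit, {"user": "alice", "action": "login_ok"})
print(verify_chain(audit))          # True
audit[0]["record"]["user"] = "bob"  # tamper with the first entry
print(verify_chain(audit))          # False
```

Deleting only the newest entry goes unnoticed by this check unless the latest hash is also stored somewhere an attacker cannot modify.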

In SAP systems, for example, the security audit log complements the system log. Both keep a record of operations carried out in the system, but their goals and methods differ slightly. These logs are compared in the table below.

| Category | Security Audit Log | System Log |
|---|---|---|
| Objective | Records security-related information that can be used to reconstruct a sequence of events, such as failed logon attempts or transaction starts. | Records information that may indicate system problems, such as database read errors and rollbacks. |
| Audience | Investigators | IT professionals |
| Usage flexibility | The security audit log can be turned on and off as needed. You do not have to audit your system every day, although you may want to. For example, you might turn on the security audit log for a period before a planned audit and turn it off between audits. | The system log is always required and is never turned off. |
| Log accessibility | Audit logs are local logs kept on each application server. Unlike the system log, the system keeps audit log files on a daily basis, and you must archive or delete them manually. This extends the period for which records are available and lets you access logs from earlier days. | System logs can be local or central. Local logs are kept on each application server; these files are circular, so once full they are overwritten from the beginning. As a result, they are available only for a limited time and up to a limited size. You can also maintain a central log, but it is not yet fully platform independent: it can currently be maintained only on UNIX platforms, and it is not retained indefinitely. |
| Handling of sensitive data | Contains sensitive personal data that may be subject to data protection laws. Pay particular attention to these laws before turning on the security audit log. | Contains no personal data. |

Table 1. Security Audit Log vs System Log
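The circular behavior of local system logs described in the table can be modeled with a fixed-capacity buffer. The Python sketch below is only a conceptual illustration (SAP writes its system log to files, not to an in-memory structure); the `CircularLog` class is hypothetical.

```python
from collections import deque

class CircularLog:
    """Fixed-capacity log: once full, each new entry overwrites the oldest one."""

    def __init__(self, capacity: int) -> None:
        # A deque with maxlen silently drops items from the left when full.
        self._entries = deque(maxlen=capacity)

    def write(self, message: str) -> None:
        self._entries.append(message)

    def read_all(self) -> list:
        return list(self._entries)

log = CircularLog(capacity=3)
for i in range(5):
    log.write(f"event {i}")
print(log.read_all())  # ['event 2', 'event 3', 'event 4']
```

The trade-off is the one the table describes: disk (or memory) use stays bounded, but older records disappear.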

What is the Difference Between a Log Management System and SIEM?

Both log management software and Security Information and Event Management (SIEM) systems use log files and event logs to improve security: they help reduce the attack surface, identify threats, and speed up response times when a security incident occurs.

Simply put, the fundamental difference between the two is that log management systems are primarily designed to gather log data, whereas SIEM systems are first and foremost security applications. A log management system can be used for security, but the added complexity often outweighs the benefits. If you want to collect all of your logs in one location, use a log management system. If you need to use logs to manage security across a large or diverse IT infrastructure, choose a SIEM system.

Another essential distinction is automation: SIEM is a highly automated system that provides real-time threat analysis, whereas log management is not automated in the same way and does not analyze threats in real time. For MSPs, the choice will depend primarily on the resources, staff, and time available. More important than anything else is the ability to evaluate customers' logs and build a cybersecurity strategy from that data.

Log management solutions and SIEMs often complement each other and occasionally compete, depending on the kind of solution being considered. Because both process event data, SIEM and log management technologies frequently serve the same purposes. Some organizations adopt a dedicated SIEM, while others want the freedom to build their own SIEM on a modern log management platform, which usually requires a solid understanding of the platform's fundamental concepts and inner workings.

What are the Best Practices for Log Management?

In today's digital world, so much data is produced that it is infeasible for IT staff to maintain and analyze logs manually across a large technology environment. They therefore need an advanced log management system with tools that automate key steps in data collection, processing, and analysis.

Here are some important factors that IT companies should think about before purchasing a log management system:

-

Start utilizing structured logging: Writing event logs into a log file in plain text is the conventional method of logging. This approach has a flaw in that plain text logs are an unstructured data format, making it difficult to filter or query them to draw out insights.

Organizations should use structured logging instead and write their logs in a format such as JSON or XML that is easier to parse, analyze, and query. Logs written in JSON are easy for both humans and machines to read, and structured JSON logs can be tabulated to support filtering and queries.

Structured logging saves time and speeds up the path to insights.
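A minimal sketch of structured logging with Python's standard `logging` module follows, assuming you want one JSON object per line. The `JsonFormatter` class and the chosen field names are illustrative, not a standard schema.

```python
import json
import logging
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),  # merges msg with its %-style args
        }
        return json.dumps(entry)

handler = logging.StreamHandler()  # writes to stderr
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("shop")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

# Emits one JSON line with timestamp, level, logger, and message fields.
logger.info("order %s placed", 1042)
```

Because every line is valid JSON, a log pipeline can filter on `level` or `logger` directly instead of parsing free text.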

-

Give automation tools top priority to lessen the IT workload: Log management is time-consuming and can drain resources in the IT department. Advanced tools can automate many routine data collection and analysis tasks. To reduce manual work throughout this process, organizations should prioritize automation features in any new log management technology and consider upgrading legacy solutions.

-

Give log messages context and meaning: Log messages should provide relevant details about the event that caused the log, as well as additional context that can aid analysts in understanding what occurred, identifying connections to other events, and identifying potential problems that need more research.

Meaningful logs are detailed and informative, giving DevSecOps teams pertinent information that helps speed up the error-detection process. Fields like these can provide log messages with useful context:

- Timestamps: By knowing the precise day and hour an event occurred, analysts can filter and search for additional occurrences that also occurred during that time period.

- User Request Identifiers: Each request that a client browser sends to an eCommerce web server carries a unique identifier code. Recording this code in the logs of every event the request triggers lets analysts trace those events back to the same request.

- Unique Identifiers: Online retailers assign unique identifiers to individual users, products, user sessions, pages, shopping carts, and more. Recording these identifiers in event logs gives valuable context and insight into the state of the application at the time of the incident.
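One way to attach the context fields above to every message, sketched here with Python's standard `logging.LoggerAdapter`, is to bind a request identifier and a session identifier once per request. The `handle_request` function and the `checkout` logger name are hypothetical.

```python
import logging
import uuid

# The format string pulls the context fields from each record, next to the timestamp.
handler = logging.StreamHandler()
handler.setFormatter(logging.Formatter(
    "%(asctime)s %(levelname)s request_id=%(request_id)s "
    "session_id=%(session_id)s %(message)s"))
base_logger = logging.getLogger("checkout")
base_logger.addHandler(handler)
base_logger.setLevel(logging.INFO)
base_logger.propagate = False  # keep these records out of root handlers with other formats

def handle_request(session_id: str) -> logging.LoggerAdapter:
    # Hypothetical request handler: generate a request ID and bind it, with the
    # session ID, to every log call made while serving this request.
    context = {"request_id": uuid.uuid4().hex, "session_id": session_id}
    log = logging.LoggerAdapter(base_logger, context)
    log.info("cart loaded")
    log.warning("payment retry")
    return log

handle_request("sess-42")
```

Both messages share the same `request_id`, so an analyst can pull every event for one request with a single filter.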

-

Use a centralized system for enhanced security and better access: Centralized log management not only improves data access but also significantly strengthens an organization's security capabilities. By storing and correlating data in a central location, organizations can identify and respond to anomalies more quickly. As a result, centralized log management can help shorten the breakout time: the critical window during which attackers can move laterally to other parts of the system.
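Collection agents such as Fluentd or Logstash normally ship logs to the central store. The Python sketch below shows only the correlation step: merging already time-sorted streams from several sources into one timeline so that related events from different systems appear side by side. The host names, messages, and addresses are made up for illustration.

```python
import heapq
from datetime import datetime

# Hypothetical per-host log streams, each already sorted by timestamp.
web_logs = [
    ("2024-11-29T10:00:01", "web01", "GET /login 200"),
    ("2024-11-29T10:00:05", "web01", "POST /login 401"),
]
firewall_logs = [
    ("2024-11-29T10:00:03", "fw01", "blocked 203.0.113.7:22"),
    ("2024-11-29T10:00:06", "fw01", "allowed 203.0.113.7:443"),
]

def central_view(*streams):
    """Merge sorted streams from many sources into one time-ordered timeline."""
    return list(heapq.merge(*streams, key=lambda e: datetime.fromisoformat(e[0])))

for ts, host, msg in central_view(web_logs, firewall_logs):
    print(ts, host, msg)
```

`heapq.merge` is lazy and never re-sorts whole streams, which is why it suits inputs that each arrive in time order.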

-

Refrain from logging unnecessary or sensitive data: Deciding what to exclude from log messages is as important as deciding what to include. Logging data that does not help with diagnostics or root cause analysis lengthens the time to insight, increases data volumes, and raises costs.

Additionally, it's crucial to avoid logging sensitive data, particularly proprietary information, source code for applications, and personally identifiable information (PII) that may be subject to laws and standards governing data protection and security, such as the European GDPR, HIPAA, or PCI DSS.
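A minimal sketch of masking sensitive values before they are written, using a standard `logging.Filter`. The two regular expressions are illustrative assumptions that catch only simple e-mail addresses and 13- to 16-digit card-like numbers; they are not a complete PII detector and will also mask other long digit strings.

```python
import logging
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional separators

class RedactingFilter(logging.Filter):
    """Mask e-mail addresses and card-like numbers before a record is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg and args first
        message = EMAIL.sub("[EMAIL]", message)
        message = CARD.sub("[CARD]", message)
        record.msg, record.args = message, ()
        return True  # keep the record, just sanitized

logger = logging.getLogger("payments")
logger.addFilter(RedactingFilter())
# The logged message becomes: charge failed for [EMAIL] card [CARD]
logger.warning("charge failed for %s card %s", "jane@example.com", "4111 1111 1111 1111")
```

Redacting at the logger keeps raw values out of every downstream handler, which is safer than cleaning logs after they are stored.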

-

To better manage volume, develop a customized monitoring and retention policy: Depending on their specific requirements and conditions, businesses should establish distinct retention rules for various log types. Organizations must use judgment when deciding what information is gathered and how long it should be kept, given the volume of data being created. Businesses should conduct an enterprise-wide analysis to identify the inputs necessary for each function.
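A retention policy can be expressed as a per-type table consulted before deletion. The Python sketch below assumes four hypothetical log types and example periods; the actual values must come from your own compliance and operational analysis.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per log type (example values only).
RETENTION = {
    "audit": timedelta(days=365),
    "security": timedelta(days=90),
    "application": timedelta(days=30),
    "debug": timedelta(days=3),
}
DEFAULT_RETENTION = timedelta(days=14)  # fallback for unlisted types

def should_retain(log_type: str, created: datetime, now: datetime) -> bool:
    """Keep a log only while it is younger than the period for its type."""
    limit = RETENTION.get(log_type, DEFAULT_RETENTION)
    return now - created < limit

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(should_retain("audit", now - timedelta(days=200), now))  # True
print(should_retain("debug", now - timedelta(days=5), now))    # False
```

Keeping the policy in one table makes it easy to review against compliance requirements.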

-

Record Logs from Various Sources: As IT infrastructures grow more complex, DevOps teams may collect logs from tens or even hundreds of different sources. Not all of these logs are crucial, but gathering the right ones can provide important information and helpful context for identifying and diagnosing faults.

-

Make use of the cloud for increased flexibility and scalability: Organizations should think about investing in a contemporary, cloud-based log management system given the constantly expanding data landscape. Utilizing the cloud increases flexibility and scalability, making it simple for enterprises to increase or decrease their processing and storage capacity in response to changing needs.

-

Index Logs for Analytical Querying: As enterprise IT environments become more complex, they produce enormous amounts of log data that can be difficult to query. Indexing logs creates a data structure designed for query efficiency, making it easier for enterprise DevOps and data teams to troubleshoot issues and derive value from their logs.

DevOps teams may decide to index their logs using log indexing engines like Elasticsearch or Apache Solr, but when analyzing logs at scale, these engines may have performance challenges or data retention trade-offs.
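Engines such as Elasticsearch and Apache Solr are built on Apache Lucene, which relies on inverted indexes. The toy Python sketch below shows only the core idea: map each token to the log lines containing it, so a query intersects small sets instead of scanning every line. Tokenization, ranking, and persistence are deliberately omitted.

```python
import re
from collections import defaultdict

def build_index(lines):
    """Map each lower-cased token to the set of line numbers that contain it."""
    index = defaultdict(set)
    for line_no, line in enumerate(lines):
        for token in re.findall(r"\w+", line.lower()):
            index[token].add(line_no)
    return index

def search(index, lines, *terms):
    """Return the lines that contain every search term (AND query)."""
    sets = [index.get(t.lower(), set()) for t in terms]
    if not sets:
        return []
    hits = set.intersection(*sets)
    return [lines[i] for i in sorted(hits)]

logs = [
    "ERROR db timeout on orders",
    "INFO user login ok",
    "ERROR payment gateway timeout",
]
idx = build_index(logs)
print(search(idx, logs, "error", "timeout"))
# ['ERROR db timeout on orders', 'ERROR payment gateway timeout']
```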

-

Set Up Alerts for Real-Time Log Monitoring: When the stakes are high, issues in the production environment must be found and resolved quickly. This is especially true on Cyber Monday, when even a brief service outage could cost thousands of dollars in lost sales.

DevSecOps teams can configure their log management systems or SIEM tools to watch the stream of ingested logs and alert on known errors or unusual events that could indicate a security incident or an application performance issue.

Direct routing of alerts to issue response teams' mobile devices and/or Slack accounts allows for quick error discovery, diagnosis, and resolution while minimizing the impact on the user experience.
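A common alert rule fires when too many errors arrive within a short time window. The Python sketch below implements a sliding-window threshold under the assumption that events arrive in timestamp order; the threshold, window, and `notify` placeholder are hypothetical stand-ins for a real paging or Slack integration.

```python
from collections import deque

class ErrorRateAlert:
    """Fire when at least `threshold` ERROR events arrive within `window` seconds."""

    def __init__(self, threshold: int, window: float) -> None:
        self.threshold = threshold
        self.window = window
        self._times = deque()  # timestamps of recent ERROR events

    def observe(self, timestamp: float, level: str) -> bool:
        if level != "ERROR":
            return False
        self._times.append(timestamp)
        # Drop events that fell out of the sliding window (assumes ordered input).
        while self._times and timestamp - self._times[0] > self.window:
            self._times.popleft()
        return len(self._times) >= self.threshold

def notify(message: str) -> None:
    # Placeholder: a real system would call a paging service or chat webhook here.
    print("ALERT:", message)

alert = ErrorRateAlert(threshold=3, window=60)
stream = [(0, "ERROR"), (10, "INFO"), (20, "ERROR"), (45, "ERROR"), (200, "ERROR")]
for ts, level in stream:
    if alert.observe(ts, level):
        notify(f"{alert.threshold} errors within {alert.window}s at t={ts}")
```

With this stream, only the third error (t=45) triggers a notification; by t=200 the earlier errors have left the window.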

How to Manage Zenarmor Logs?

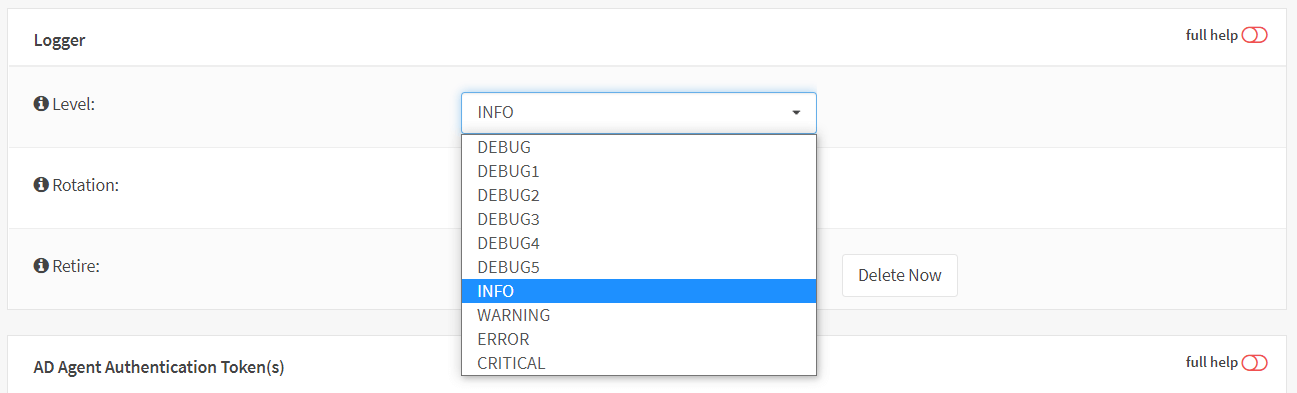

Zenarmor NGFW lets you configure the log level, the rotation interval, and the retirement (deletion) period of its log files. To manage Zenarmor logs, navigate to Zenarmor > Configuration > General in the OPNsense web UI and scroll down to the Logger pane.

Zenarmor log levels fall into the five categories below:

- INFO: INFO is the standard log level signifying that an event occurred and the application entered a particular state.

- CRITICAL: Indicates that the application has encountered an event or entered a state in which a vital business function has ceased to function.

- ERROR: The application has encountered a problem that prevents one or more functions from operating correctly.

- WARNING: Indicates that an unexpected event, problem, or situation has occurred within the application that may disrupt one of its processes. It does not mean that the application has failed.

- DEBUG: Used for diagnostic and troubleshooting information. Five debug levels are available:

  - DEBUG

  - DEBUG2

  - DEBUG3

  - DEBUG4

  - DEBUG5

Figure 1. Zenarmor Log levels on OPNsense
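Selecting a log level usually acts as a threshold: messages at that level and at more severe levels are written, and less severe ones are dropped. The Python sketch below models that common convention for the levels listed above. The severity order, and in particular the idea that DEBUG2 through DEBUG5 are progressively more verbose, is an assumption and is not taken from Zenarmor's documentation.

```python
# Assumed severity order, most severe first (common convention, NOT from
# Zenarmor's documentation; the DEBUG2..DEBUG5 ordering is an assumption).
LEVELS = ["CRITICAL", "ERROR", "WARNING", "INFO",
          "DEBUG", "DEBUG2", "DEBUG3", "DEBUG4", "DEBUG5"]
RANK = {name: i for i, name in enumerate(LEVELS)}

def is_logged(message_level: str, configured_level: str) -> bool:
    """A message is written if it is at least as severe as the configured level."""
    return RANK[message_level] <= RANK[configured_level]

print(is_logged("ERROR", "INFO"))     # True: errors are kept at INFO level
print(is_logged("DEBUG", "INFO"))     # False: debug output is suppressed
print(is_logged("DEBUG3", "DEBUG5"))  # True
```

Under this model, a more verbose setting produces more log data, which is why rotation and retirement matter.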

Zenarmor offers three log rotation intervals; the default is 1 Day:

- 1 Hour

- 1 Day

- 1 Week

A system's log files could ultimately occupy all of its available disk space if they are not regularly rotated and deleted.
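Zenarmor's rotation is set in the web UI, but the same idea applies to your own applications. As an analogy (not Zenarmor's implementation), the Python sketch below maps the three intervals onto the standard library's `TimedRotatingFileHandler`, whose `backupCount` deletes the oldest rotated files so that disk usage stays bounded. Mapping "1 Week" to a seven-day interval and keeping three backups are assumptions.

```python
import logging
import os
import tempfile
from logging.handlers import TimedRotatingFileHandler

# Rotation choices mirroring the three Zenarmor options (mapping is our assumption).
ROTATION = {
    "1 Hour": ("H", 1),
    "1 Day": ("D", 1),
    "1 Week": ("D", 7),
}

def make_rotating_handler(path: str, rotation: str = "1 Day", keep: int = 3):
    when, interval = ROTATION[rotation]
    # backupCount removes the oldest rotated file once more than `keep` exist.
    return TimedRotatingFileHandler(path, when=when, interval=interval, backupCount=keep)

log_dir = tempfile.mkdtemp()
handler = make_rotating_handler(os.path.join(log_dir, "app.log"))
logger = logging.getLogger("rotating-demo")
logger.addHandler(handler)
logger.warning("written to app.log; rotated daily, 3 old files kept")
handler.close()
```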

Using the Retire option, you can select a deletion period for log files. By default, log files are purged after three days. To delete log files immediately, for example in an emergency, click the Delete Now icon.
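The age-based purge behind an option like Retire can be sketched in Python as a function that deletes log files whose last modification time is older than a cutoff. The function name `retire_logs`, the `*.log*` file pattern, and the use of modification time are assumptions for illustration, not how Zenarmor implements the feature.

```python
import os
import tempfile
import time
from pathlib import Path
from typing import List, Optional

SECONDS_PER_DAY = 86400

def retire_logs(log_dir: str, max_age_days: float = 3, now: Optional[float] = None) -> List[str]:
    """Delete log files (*.log and rotated *.log.*) older than max_age_days; return their names."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * SECONDS_PER_DAY
    removed = []
    for path in Path(log_dir).glob("*.log*"):
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            removed.append(path.name)
    return sorted(removed)

# Demo: one file backdated five days, one fresh file.
demo_dir = tempfile.mkdtemp()
for name in ("old.log", "new.log"):
    open(os.path.join(demo_dir, name), "w").close()
five_days_ago = time.time() - 5 * SECONDS_PER_DAY
os.utime(os.path.join(demo_dir, "old.log"), (five_days_ago, five_days_ago))
print(retire_logs(demo_dir))  # ['old.log']
```

Running such a job on a schedule keeps log storage from eventually filling the disk.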